Science is supposed to be the domain of testable (and thus falsifiable) claims, supported by a body of empirical studies that have withstood the scrutiny of reproducibility. With the advent of computers, that method of science seems to be growing increasingly passé. Why, we don’t need to bother actually doing the grunt work of experimentation; we can just sit back, press a button and let a computer model tell us the results. Of course the fault lies not in our machines but in ourselves (with apologies to the Bard). Computers are but tools that make some of that grunt work easier. But computer models are not so infallible that their output alone should be relied upon. They are imperfect not due to some failing of the technology but rather because they can only do what we tell them, and we humans are far from perfect or omniscient.

The flaw in computer models is twofold: assumptions and unknowns. Assumptions are made about the contributions of certain factors, and those assumptions are often wrong; even when they are close to right, small differences in input can still introduce tremendous variability in outcomes. Unknown unknowns are an even greater contributor to the modeling phenomenon known as “garbage in, garbage out.” We can’t account for the contribution of something we don’t even know exists.
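To put a number on how much a "close to right" assumption can matter, here is a minimal Python sketch. It is a toy, not any real regulatory or climate model: the `project` function, the 2% and 3% rates and the 100-step horizon are all made up for illustration.

```python
def project(initial, rate, steps):
    """Toy model (hypothetical): compound growth at a fixed assumed rate per step."""
    value = initial
    for _ in range(steps):
        value *= 1.0 + rate
    return value


# Two assumptions that differ by a single percentage point.
low = project(1.0, 0.02, 100)
high = project(1.0, 0.03, 100)

# How far apart the two "predictions" end up after 100 steps.
ratio = high / low

print(f"assumed rate 2%: {low:.2f}")
print(f"assumed rate 3%: {high:.2f}")
print(f"high / low: {ratio:.2f}")
```

Both runs use the same code and the same starting value; only the assumed rate differs, yet the outputs end up more than a factor of two apart.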

Models are supposed to be part of an iterative process where you do the actual experiment, compare the results to your model’s output and then modify your model. To test the model, you then change some of the variables and see how well it holds up in comparison to “real world” results. But as soon as a new variable is introduced or a new unknown comes into play, the model’s usefulness must be called into question.
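That calibrate-then-test loop can be sketched in a few lines of Python. Everything here is an assumption for illustration: the straight-line model, the helper names `fit_slope` and `max_relative_error`, the 10% tolerance, and above all the "observations," which are invented numbers and not real measurements.

```python
def fit_slope(xs, ys):
    """Least-squares slope for a line through the origin, y = k * x."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


def max_relative_error(k, xs, ys):
    """Worst-case relative gap between the model's output and the observations."""
    return max(abs(k * x - y) / abs(y) for x, y in zip(xs, ys))


TOLERANCE = 0.10  # hypothetical acceptance threshold: 10% relative error

# Step 1: do the experiment and calibrate the model (invented numbers).
calib_x = [1.0, 2.0, 3.0, 4.0]
calib_y = [2.1, 3.9, 6.2, 7.8]
k = fit_slope(calib_x, calib_y)
calib_err = max_relative_error(k, calib_x, calib_y)

# Step 2: change the variables and compare against new "real world" results.
# These invented observations flatten out, as if an unmodeled factor kicked in.
new_x = [8.0, 16.0]
new_y = [13.0, 18.0]
new_err = max_relative_error(k, new_x, new_y)

# Step 3: decide whether the model survives or needs to be revised.
model_holds = new_err <= TOLERANCE

print(f"fitted slope: {k:.2f}")
print(f"error on calibration data: {calib_err:.1%}")
print(f"error on new conditions: {new_err:.1%}")
print("model holds" if model_holds else "model needs revision")
```

The model looks fine on the data it was tuned to and fails once conditions move outside that range, which is exactly the point at which it should go back to the bench rather than into a regulation.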

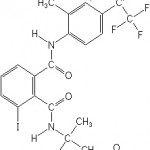

Now by this point you might think I’m going to delve into an indictment of the climate models’ poor record of prediction, but I’d actually like to talk about nuts. Or rather, how we should all expect the prices of nuts and a host of other crops (pistachios, almonds, soybeans, tobacco, peanuts, cotton, lettuce, alfalfa, tomatoes, watermelon and bell peppers) to increase in the coming years due to the EPA banning a pesticide known as flubendiamide. The EPA determined that flubendiamide could break down in the environment and potentially cause harm to a few aquatic species. OK, sounds like some dangerous stuff, fair enough. But it turns out this alleged harm is based not on empirical studies but on computer models that attempt to predict toxicity (“predictive toxicology,” they call it). Bayer CropScience, the manufacturer of flubendiamide, went on record stating that such models “exaggerate environmental risk.” Well, imagine that: a computer model overstates the likelihood of a deleterious outcome in order to justify governmental intrusion into the market. Although science cannot be manipulated to serve political interests, models surely can: click, click, here comes the desired result.

This ultimately is the true danger of such models. It is one thing if scientists want to put all their faith in such models; the worst that can happen is that eventually someone is made to look the fool when actual empirical studies prove them wrong. However, it is far more dangerous if the cart is pushing the public policy horse, with bureaucrats and our supposed intellectual superiors running our lives and then justifying their actions by pointing at selectively funded model-based “research” that can be tweaked to magically provide an outcome that conforms to the desired policy prescriptions. All that is needed to shut down debate is to claim “it’s science” and that it is “settled.”